-

• #2

It seems the forum software does not like multiple uploads with the same file name, so here is an additional post with screenshots of the demo watchfaces...

Edit: It does work; it just does not like *.bmp files...

-

• #3

I had a quick look. I think it needs a short tutorial that builds a very simple example from scratch, e.g. just a digital clock, and then extends it step by step.

-

• #4

The watch faces there look good, although my concern with something like this is that at some point it may end up complicated enough that it's trickier than just writing the watch face directly in JS, where there are code comments and you can look up what functions do...

Since you're doing a bunch of work on uploading the zip file anyway, rather than uploading images and JSON separately and decoding them in the app, why not use it to create the app's JS file straight in the app loader? Then you can avoid the whole 'interpreting' step, which I guess is causing your memory and speed problems right now.

-

• #5

@HughB

Yes, a tutorial would be useful. Since the file formats are probably going to change, I would hold off on that a bit though.

@Gordon

I pushed some commits which do help with "perceived" performance. There were some bugs in setting intervals and timers, and the watchfaces were being redrawn far too often. The conversion of compressed or b64 image strings to buffers for drawing is now cached, which saves some expensive operations on every refresh. There is still lots of room for improvement. At the very least, figuring out what actually has to be redrawn would help.

The digitalretro watchface needs about half of the available RAM on the Bangle 2, so for now my focus is on drawing speed. That is mostly about saving energy through fewer calculations; watchfaces are not that dynamic anyway when drawn every minute or even every second.

Would creating the script in the browser help with memory? Reading the resources file and getting it into an object is fast enough, on the order of 80ms. Is the script code accessed directly from flash, or does it get loaded into memory before it is executed? Can I actually avoid getting the image data into RAM until it is needed for drawing?

The idea comes from the format that is created by the unofficial de/compiler for Amazfit BIP watchfaces. I have a watch face I like for my BIP S that is now easy to port. Since there seem to be thousands of these unofficial watchfaces for the BIP S, maybe there are some that are worth porting over to a similar format, but not worth creating a dedicated app for every single one. Porting would mostly consist of slight structural changes to the decompiled JSON, and most of that can probably be automated.

-

• #6

Would creating the script in the browser help with memory?

Yes, if you're not having to load a bunch of JSON, but instead the 'draw' function just has a bunch of draw commands I think that will help a lot.

needs about half of the available RAM on bangle2

While that's fine, that is still quite a lot - 10 times as much as some watchfaces.

Is the script code accessed directly from flash,

Worth checking http://www.espruino.com/Code+Style#other-suggestions but yes - functions have their code stored in flash memory and are executed from there.

Can I actually avoid getting the image data into RAM until it is needed there for drawing?

If the images are stored as binary files in Storage, you can actually render them direct from flash:

```javascript
g.drawImage(require("Storage").read(...))
```

unofficial de/compiler for Amazfit BIP watchfaces. I have a watch face I like for my BIP S that is now easy to port

Now that would be amazing. If you could make it compatible with the watch face compiler format, then we could suddenly get loads of cool BIP watchfaces!

-

• #7

While that's fine, that is still quite a lot - 10 times as much as some watchfaces.

The simpleanalog one uses more like 15%, which would be about the bare minimum for something useful. The digitalretro watchface is meant to be a feature-complete demo and covers nearly all possible weather codes, which currently means 48 images at 63x64 pixels.
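As a rough sanity check on those numbers: the bit depth of the weather icons is not stated, so the bpp values below are assumptions, and the sketch assumes tightly packed pixels with no per-row padding. It just computes the raw pixel-data size of 48 images at 63x64 pixels.

```javascript
// Raw pixel-data size of one image, rounded up to whole bytes.
// Assumes tightly packed pixels (no per-row padding).
function imageBytes(width, height, bpp) {
  return Math.ceil((width * height * bpp) / 8);
}

// 48 weather icons at 63x64 pixels, for a few candidate bit depths
var count = 48;
[1, 3, 4, 16].forEach(function (bpp) {
  var total = count * imageBytes(63, 64, bpp);
  console.log(bpp + "bpp: " + total + " bytes");
});
```

Even at 3bpp that is 72576 bytes of pixel data (1512 bytes per icon), which is why keeping the images out of RAM until they are drawn matters so much here.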

if the images are stored as binary files in Storage,

Since I don't want to clutter the file system with 50-100 files, maybe writing the offsets and lengths into the JSON resources file, and only the actual pixel data into one big concatenated "image" file, would be a reasonable way? Reading directly should still be possible using the offset and length parameters.
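A minimal sketch of that layout in plain JS, assuming each image is already a binary string (one char per byte): the pixel data is concatenated into one blob and the JSON keeps only `offset` and `length` per image. On the watch the read side would presumably be `require("Storage").read(file, offset, length)`; here `readSlice` is a hypothetical stand-in doing a plain string slice so the sketch runs anywhere.

```javascript
// Concatenate binary image strings into one blob plus an index of offsets/lengths.
// `images` maps a resource name to a binary string.
function packImages(images) {
  var blob = "";
  var index = {};
  Object.keys(images).forEach(function (name) {
    index[name] = { offset: blob.length, length: images[name].length };
    blob += images[name];
  });
  return { blob: blob, index: index };
}

// Hypothetical stand-in for require("Storage").read(file, offset, length)
function readSlice(blob, offset, length) {
  return blob.substring(offset, offset + length);
}

// Fetch one image by name without touching the others
function readImage(packed, name) {
  var entry = packed.index[name];
  return readSlice(packed.blob, entry.offset, entry.length);
}

var packed = packImages({ sun: "\x01\x02\x03", rain: "\x04\x05" });
console.log(JSON.stringify(packed.index));
```

Only `packed.index` would go into the JSON resources file; the blob would be written once as the single "image" file.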

-

The Amazfit decompiler creates a very similar JSON, but it numbers all extracted images in series and references them with a starting image and a count. That's bad for making changes before recompiling, since inserting an image means renaming all files after it and changing all references. But it is probably easy enough to either automatically convert the referenced "blocks" of images to directories for the new format, or to support this naming scheme optionally. Both ways should lead to at least somewhat working automatic ports. Any further changes would still need manual modifications, and at that point renaming the files and using the hierarchical structure would be better. The current feature set is probably enough for some of the watch faces, but some features are still missing.

It seems there has been development on the Amazfit side of things since I last tried it. The last version of this editor that I tried was completely unusable and broken; it seems a lot better now. The format actually seems to have changed too, so I will have to take a closer look.

https://v1ack.github.io/watchfaceEditor/

-

• #8

pixel data into one big concatenated "image" file would be a reasonable way?

Yes, that'd work. If the images are the same size/bit depth you can also use the `frame` argument to `drawImage` to pull out just that image frame.

Being able to just directly load up an Amazfit zip would be great, especially if there's actually a proper graphical editor for them now...

-

• #9

I have just pushed changes to convert a good part of the Amazfit features. There is still some fine tuning to do, but there are watchfaces that convert pretty nicely. The screenshots were made by taking watchfaces from https://amazfitwatchfaces.com/ and the decompiler from the Help page on https://v1ack.github.io/watchfaceEditor/.

Reading all image data from one file using length and offset keeps RAM usage manageable, but now rendering speed remains a big problem. The more complex watchfaces take up to 1.6 seconds for one rendering pass. That's way too slow to show, for example, seconds when unlocked, and it eats battery like there's no tomorrow.

@Gordon: Any ideas how to get some extra speed from the rendering?

I have tried rendering everything to a buffer and then writing the result to the display, but that takes even longer, since I need to write the full resolution not only for the background, but a second time once everything else has been rendered to the buffer. It seems rendering to the off-screen buffer is comparable in speed to rendering directly to the display. Drawing a full screen at 3bpp alone takes about 95ms.


-

• #10

Do you have an example app, as uploaded, that I could take a look at?

It's hard to say anything useful without actually taking a look at the code and seeing what it's doing.

-

• #11

I have found 2 huge improvements:

- Collapsing the tree into a flat array on the browser side saves about 50% rendering time on the watch. That might complicate further savings using partial redraws, but that's currently just an idea.

- Using `g.transformVertices` to draw the rotated analog vector hands, which is about 30% faster for those.
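A sketch of what collapsing the tree could look like on the browser side. The tree format here is made up for illustration (groups with optional `X`/`Y` offsets, and keys such as `Image` or `Poly` holding draw parameters); the real imageclock JSON may differ. The tree is walked once, offsets are accumulated, and the watch only gets a flat list it can loop over without recursion.

```javascript
// Hypothetical format: keys listed in DRAW_TYPES are leaf draw commands,
// any other object-valued key is a nested group with optional X/Y offsets.
var DRAW_TYPES = ["Image", "Poly", "Number", "MultiState", "CodedImage", "Scale"];

function flatten(node, offX, offY, out) {
  var x = offX + (node.X || 0);
  var y = offY + (node.Y || 0);
  Object.keys(node).forEach(function (key) {
    var value = node[key];
    if (DRAW_TYPES.indexOf(key) >= 0) {
      // Leaf: record the draw type, its parameters and the absolute offset
      out.push({ type: key, params: value, x: x, y: y });
    } else if (typeof value === "object" && value !== null) {
      // Nested group: recurse, carrying the accumulated offset down
      flatten(value, x, y, out);
    }
    // Numeric keys such as X/Y are consumed above and skipped here
  });
  return out;
}

var tree = {
  Background: { Image: { file: "bg" } },
  Time: {
    X: 10, Y: 20,
    Hours: { X: 0, Image: { file: "h" } },
    Minutes: { X: 40, Image: { file: "m" } }
  }
};
var flat = flatten(tree, 0, 0, []);
console.log(JSON.stringify(flat));
```

The trade-off mentioned above shows up here too: once the hierarchy is gone, knowing which group a given entry belonged to (for a partial redraw) has to be stored explicitly if it is needed.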

I have added expensive code for tracking time, so the absolute times are inflated a lot by that. The overview of the tracked times can be printed with printPerfLog(). With time tracking deactivated, it is still a bit too slow for Amazfit watchfaces with seconds. It is, however, a lot closer to sub-second rendering than before.

```
...
drawImage last: 88 average: 86 count: 2 total: 173
drawIteratively last: 282 average: 282 count: 1 total: 282
drawIteratively_handling_Image last: 97 average: 99 count: 2 total: 198
drawIteratively_handling_Poly last: 62 average: 62 count: 1 total: 62
```

In this example the two drawIteratively_handling_Image calls take 25ms longer than the two drawImage calls they wrap. The logging uses about 8ms per stored element (startPerfLog and endPerfLog combined).

So the remaining 4.5ms per call (25ms / 2 = 12.5ms, minus 8ms of logging) seems to have been used by an `if` checking an object property, a `switch` statement and the function call to my `drawImage` function.

Is that expected? 4.5ms at 64MHz would be about 288k clock cycles, which seems somewhat excessive to me 😉.
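The logging code itself is not shown in the post, so the following is only a guess at how `startPerfLog`, `endPerfLog` and `printPerfLog` could produce a log in the format above. The function names come from the post; the bodies are hypothetical, and timestamps use `Date.now()` in milliseconds.

```javascript
// Hypothetical perf log: per-name last/average/count/total in milliseconds
var perfStarts = {};
var perfStats = {};

function startPerfLog(name) {
  perfStarts[name] = Date.now();
}

function endPerfLog(name) {
  var elapsed = Date.now() - perfStarts[name];
  var s = perfStats[name];
  if (!s) s = perfStats[name] = { last: 0, count: 0, total: 0 };
  s.last = elapsed;
  s.count++;
  s.total += elapsed;
}

function printPerfLog() {
  Object.keys(perfStats).forEach(function (name) {
    var s = perfStats[name];
    console.log(name + " last: " + s.last + " average: " + Math.floor(s.total / s.count) +
      " count: " + s.count + " total: " + s.total);
  });
}

// Example: time a piece of work
startPerfLog("work");
var sum = 0;
for (var i = 0; i < 100000; i++) sum += i;
endPerfLog("work");
printPerfLog();
```

Every call does two object lookups and a timestamp read, which on an interpreter is exactly the kind of overhead that inflates the wrapped timings.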



-

• #12

Hi,

I don't have much time today, but just some things I've seen quickly:

```javascript
if (resource.file){
  result.buffer = E.toArrayBuffer(atob(require("Storage").read(resource.file)));
  // ...
}
```

- You should really store the image as binary: do the `atob` in the write call, so that when you read it you don't have to call `atob`, and it's not having to read the whole image into RAM and then convert it from base64. It'll take up less space too.
- Same with compressed images: in most cases I imagine it's going to be far faster to just read the image uncompressed, since you're not having to load the whole thing into RAM.
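A sketch of that change, using a plain object as a hypothetical mock of `require("Storage")` so it runs anywhere: the base64 is decoded once at write time, so every later read returns the raw bytes with no `atob` at draw time. On the watch the read side would then reduce to `E.toArrayBuffer(require("Storage").read(file))`.

```javascript
// Plain object standing in for require("Storage") (hypothetical mock)
var storage = {
  files: {},
  write: function (name, data) { this.files[name] = data; },
  read: function (name) { return this.files[name]; }
};

// Before: base64 text is stored, so every read has to decode the whole image
function writeBase64(name, b64) { storage.write(name, b64); }
function readBase64(name) { return atob(storage.read(name)); }

// After: decode once when installing, store the raw bytes
function writeBinary(name, b64) { storage.write(name, atob(b64)); }
function readBinary(name) { return storage.read(name); }

var b64 = btoa("\x00\x10\x20\x30\x40\x50");
writeBase64("img.b64", b64);
writeBinary("img.bin", b64);
console.log(storage.read("img.b64").length, storage.read("img.bin").length);
```

The stored size also drops to three quarters, since base64 uses 4 characters for every 3 bytes.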

I'd mentioned above about it being so much better to use your customiser app to just put all the draw calls together rather than using switch statements. What you're doing right now is making an interpreter (for your JSON files) inside the Espruino JS interpreter - so cycles are being thrown away.

So instead of:

```javascript
for (var current in element){
  // ...
  switch(current){
    //...
    case "MultiState": drawMultiState(currentElement, elementOffset); break;
    case "Image": drawImage(currentElement, elementOffset); break;
    case "CodedImage": drawCodedImage(currentElement, elementOffset); break;
    case "Number": drawNumber(currentElement, elementOffset); break;
    case "Poly": drawPoly(currentElement, elementOffset); break;
    case "Scale": drawScale(currentElement, elementOffset); break;
    // ...
  }
}
```

Do that inside your customiser and instead just write JS directly that looks like:

```javascript
drawCodedImage(element[0], elementOffset);
drawMultiState(element[1], elementOffset);
drawPoly(element[2], elementOffset);
```

You could even do that in JS inside the app so your customiser doesn't have to change (create a set of draw commands in a string and then 'eval' it).
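A minimal sketch of the "build a string of draw commands, then evaluate it" idea, assuming a flat element list where each entry has a `type` such as `Image` or `Poly` (a hypothetical format) and a matching draw function exists. Standard JS `new Function` stands in for the `eval` here so the generated code receives the draw functions and elements as explicit parameters; its speed on Espruino is not something I have measured.

```javascript
// Hypothetical draw functions; on the watch these would call g.drawImage(), g.fillPoly() etc.
var log = [];
var drawFns = {
  Image: function (el, off) { log.push("Image " + el.file + " @" + off); },
  Poly: function (el, off) { log.push("Poly " + el.points.length + " @" + off); }
};

// Generate straight-line source with one call per element, so no switch
// statement has to be parsed and evaluated at draw time.
function compileSource(elements) {
  return elements.map(function (el, i) {
    // Only known types are allowed into the generated code
    if (!drawFns.hasOwnProperty(el.type)) throw new Error("Unknown type " + el.type);
    return "fns." + el.type + "(element[" + i + "], offset);";
  }).join("\n");
}

var elements = [
  { type: "Image", file: "bg" },
  { type: "Poly", points: [0, 0, 10, 0, 5, 8] }
];
var src = compileSource(elements);
// src is:
// fns.Image(element[0], offset);
// fns.Poly(element[1], offset);
var draw = new Function("fns", "element", "offset", src);
draw(drawFns, elements, 0);
console.log(log.join(", "));
```

Checking each `type` against the known draw functions while generating also keeps arbitrary strings from a downloaded watchface out of the evaluated code.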

Worth looking at http://www.espruino.com/Performance but Espruino executes the JS from source. That means that if you have a switch statement it's having to parse through all the code every time it handles the switch - so yes, it'll be slow.

You could see how we handle it in the Layout library to run through things quick - add the functions to an object, and then just index that object to find the right function to run: https://github.com/espruino/BangleApps/blob/master/modules/Layout.js#L346-L396

But really it's much better to avoid that completely if you can.

-

• #13

Thanks Gordon. I had expected that implementing the code generation would be complicated, but actually it was relatively easy, since the flattening of the tree was already there. Some results with different watchfaces:

| Watchface | No data file, tree | Tree | Collapsed | Precompiled |
|---|---|---|---|---|
| simpleanalog | 420ms | 440ms | 190ms | 170ms |
| digitalretro | 2830ms | 2830ms | 1490ms | 1366ms |
| gtgear | LOW_MEMORY,MEMORY | 2020ms | 1150ms | 1060ms |

Precompiling the watchface to draw calls gives roughly an 8-10% reduction in drawing time on top of reducing the tree down to an array. Storing all images directly as binary strings does not do a lot, on the order of single-digit milliseconds. But every little thing counts.

Would you say replacing a switch with something like this generally would be faster?

```javascript
function doA(p){}
function doB(p){}

for (var c of items){
  switch(c.name){
    case "A": doA(c.param); break;
    case "B": doB(c.param); break;
  }
}

// faster than the switch?
for (var c of items){
  // look up the function by name, then call it with the actual parameter
  eval('do' + c.name)(c.param);
}
```

Absolutely catastrophic security-wise, but probably not really problematic for the Bangle. If people start using watchfaces from wherever, maybe input sanitization would be in order.

Maybe drawing parts of the clock (digital time, weather, status icons) into ArrayBuffers in an event-driven way, and only compositing those together on every draw, could be faster? At least the digital time would only be refreshed once a minute instead of on every call like now. Parts could individually change at their own speed without actually being redrawn on every refresh. Instead, there would be the compositing overhead on every draw...
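A small plain-JS sketch of that event-driven idea, with hypothetical names: each part of the clock keeps a cached rendering and a `dirty` flag, only dirty parts are re-rendered, and every frame just composites the cached results. On the watch the cache would presumably be one `Graphics.createArrayBuffer(...)` per part; here a string stands in for it so the logic can be checked anywhere.

```javascript
// A layer re-renders only when marked dirty; compositing reuses the cached output.
function Layer(name, render) {
  this.name = name;
  this.render = render;   // produces the layer's content
  this.cache = null;      // stands in for a per-layer offscreen buffer
  this.dirty = true;
  this.renders = 0;
}

Layer.prototype.get = function () {
  if (this.dirty) {
    this.cache = this.render();
    this.renders++;
    this.dirty = false;
  }
  return this.cache;
};

function composite(layers) {
  // On the watch this would draw each cached buffer onto g in order
  return layers.map(function (l) { return l.get(); }).join("|");
}

var minute = 41;
var time = new Layer("time", function () { return "12:" + minute; });
var weather = new Layer("weather", function () { return "sunny"; });
var layers = [time, weather];

composite(layers);                // first frame renders both layers
composite(layers);                // nothing dirty: both come from the cache
minute = 42; time.dirty = true;   // e.g. set from a once-per-minute timer
console.log(composite(layers));   // only "time" is re-rendered
console.log(time.renders, weather.renders);
```

Whether this wins depends on how the compositing cost compares to re-rendering, which is the overhead the post already points out.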

-

• #14

What about something like this?:

```javascript
var dofns = {
  "A":function(p){},
  "B":function(p){}
};

for (var c of items) {
  if (dofns[c.name]) (dofns[c.name])(c.param);
  else doerror(); // doerror: your own error handler
}
```

-

• #15

I like that; it seems safer than my eval() approach. It is probably a tiny bit slower because of the property-name lookups, but it should be faster than the switch. It seems close to what Gordon mentioned from the Layout library.

-

• #16

Great! A definite improvement anyway. I'm not sure how far you've gone (including `drawScale` as I suggested, or going even further to just including `g.drawXYZ` calls), but definitely the further you can go the better.

Yep, I'd say what @andrewg_oz says above for speed.

Maybe you could do a check on `items` once at startup (if you feel you need it), then skip the check each time you render. If there's an issue you'll still get an exception, it just won't be as verbose. I've not benchmarked it, but I would guess that

```javascript
items.forEach(c=>dofns[c.name](c.param));
```

might be marginally faster.
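A sketch of that split, reusing the `dofns`/`items` shape from the thread (the function bodies are placeholders): the validation runs once at startup, and the per-render loop is just the `forEach` line with no checks.

```javascript
// Draw functions keyed by element name (bodies are placeholders)
var calls = [];
var dofns = {
  A: function (p) { calls.push("A" + p); },
  B: function (p) { calls.push("B" + p); }
};

// Run once at startup: fail early if any item has no matching function
function checkItems(items) {
  items.forEach(function (c) {
    if (typeof dofns[c.name] !== "function") throw new Error("No draw function for " + c.name);
  });
}

// Called on every render: no per-item checks left
function render(items) {
  items.forEach(c => dofns[c.name](c.param));
}

var items = [{ name: "A", param: 1 }, { name: "B", param: 2 }];
checkItems(items);
render(items);
console.log(calls.join(","));
```

If `items` never changes after loading, the check only costs something once, while the hot path stays as short as possible.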

Maybe drawing parts of the clock (digital time, weather, status icons) into arraybuffers in an event driven way and only compositing those together on every draw could be faster?

Yes, that's a tricky one. If you knew the outline you could use `g.setClipRect` to restrict drawing just to the clock area and then refresh just that bit.

IMO you might be able to find quite a lot of speed just by modifying how things get compiled down though (e.g. if you know it's a binary uncompressed image you can just draw that and skip all the checks).
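If each element knows its bounding box (an assumption; for converted Amazfit faces those would probably have to be computed from image sizes and positions), the outline for `g.setClipRect` can be the union of the boxes of whatever changed. A plain-JS sketch of that union, with the Espruino calls left as comments:

```javascript
// Union of axis-aligned boxes {x1, y1, x2, y2} (inclusive corners)
function unionRect(rects) {
  return rects.reduce(function (u, r) {
    return {
      x1: Math.min(u.x1, r.x1), y1: Math.min(u.y1, r.y1),
      x2: Math.max(u.x2, r.x2), y2: Math.max(u.y2, r.y2)
    };
  });
}

// Boxes of the elements that changed since the last draw (example values)
var changed = [
  { x1: 20, y1: 60, x2: 80, y2: 100 },   // hours
  { x1: 90, y1: 60, x2: 150, y2: 100 }   // minutes
];
var clip = unionRect(changed);
console.log(JSON.stringify(clip));
// On the watch, something like:
// g.setClipRect(clip.x1, clip.y1, clip.x2, clip.y2);
// ...redraw the background and the changed elements...
// g.setClipRect(0, 0, g.getWidth() - 1, g.getHeight() - 1);
```

A single union box is the simplest option; two widely separated changes would make it cover a lot of unchanged screen, in which case clipping and redrawing each box separately may be cheaper.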

-

• #17

I have actually removed all options for image resources except uncompressed binary, since the other types are not really beneficial for anything. That shaves another couple of percent off the draw time.

I had not seen `setClipRect` yet; that could be really useful for drawing partially without having to split the background into several parts. Splitting the background would be possible for native watchfaces, but probably not that easy for the automatic Amazfit conversion, since there are no bounds on image sizes.

Is it correct that I could set a ClipRect and then just drawImage with the full-screen-size background, and have it only touch the ClipRect pixels and be faster than drawing without a ClipRect? That would be an awesome alternative to drawing into ArrayBuffers and compositing them together.

-

• #18

Is it correct, that I could set a ClipRect and then just drawImage with the full screen size background and have it only touch the ClipRect pixels and be faster than drawing without ClipRect?

Yes... although it may not be as much faster as you expect, so I'd test it. While offscreen bitmaps generally get ignored, Espruino may still end up going through every pixel and just not drawing it for some images.

-

• #19

Edit: Spoke too soon... I had inadvertently compared execution from RAM via the IDE with running from flash... Still, a few ms can be gained.

Moving up the scopes when using global variables seems extremely expensive. I have changed to giving the resource definition as function parameter instead of using the globally defined variable and the digitalretro watchface went from 1360ms to about 970ms, so about 30% faster 😱
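The change boils down to something like the following (names are illustrative, not the actual imageclock code). Both versions compute the same thing; in the second one `resources` is found in the function's own scope instead of by walking up to the outer (global) scope on every access, which is what makes it cheaper on Espruino. Per the edit at the top of the post, the real gain turned out to be a few ms rather than 30%, and a JIT-compiling engine like a browser or Node will likely show no difference at all.

```javascript
var resources = { bg: { offset: 0, length: 10 }, hand: { offset: 10, length: 4 } };

// Before: every access to `resources` is resolved through the scope chain
function totalLengthGlobal(names) {
  var sum = 0;
  for (var i = 0; i < names.length; i++) sum += resources[names[i]].length;
  return sum;
}

// After: the resource definition is passed in, so it is a local lookup
function totalLengthParam(res, names) {
  var sum = 0;
  for (var i = 0; i < names.length; i++) sum += res[names[i]].length;
  return sum;
}

console.log(totalLengthGlobal(["bg", "hand"]), totalLengthParam(resources, ["bg", "hand"]));
```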

The performance page in the documentation says that globals are slower to find, but finding out by how much surprised me.

-

• #20

The performance page in the documentation says that globals are slower to find, but finding out by how much suprised me.

It depends on your app, but if you think about it, it's got to look back at every point along the scope, searching all function vars, then vars in the scope that function was defined in, and so on...

I did have plans for a cache to speed it up, but because it's JS there are all kinds of nasty edge-cases that mean that while it'd work in virtually all cases, you could come up with some code that broke it - and one thing I've found with Espruino is if there's an edge-case, someone will find it sooner or later and complain :)

-

• #21

someone will find it sooner or later and complain

that would be me :P

-

• #22

I have managed to add some features and squeeze some additional performance out of the watchface. Using it daily is quite possible now. To do that, I had to render part of the watchface to a buffer, to be able to overlay it with analog hands without having to redraw the whole thing on every refresh.

However, I did not manage to create a working solution with a buffer bit depth other than 16 bit. I expected 4 bit to be enough for the Bangle 2, but that garbled all colors.

I have also tried using 8 bit color, but had the same problem as with 4 bit, just other wrong colors.

In essence I am trying to do something like this:

```javascript
var img16 = {
  width : 16, height : 16, bpp : 16,
  transparent : 1,
  buffer : require("heatshrink").decompress(atob("AA//AA34gABFgEfAIwf/D70H/4BG8ABF/EfAIv/8ABFD/4ffgEQAIsEgABFwABGwgBGD/4ffwkfAIuH8ABF/kQAIv/+ABFD/4ffA"))
};
var img3 = {
  width : 16, height : 16, bpp : 3,
  buffer : require("heatshrink").decompress(atob("gEP/+SoVJAtNt2mS23d2wFpA"))
};

var b = Graphics.createArrayBuffer(176,176,8);
b.drawImage(img16,0,0,{scale:5}); //top left in buffer
b.drawImage(img3,0,96,{scale:5}); //bottom left in buffer
g.drawImage(b.asImage());
g.drawImage(img16,96,0,{scale:5}); //top right direct
g.drawImage(img3,96,96,{scale:5}); //bottom right direct
```

Changing the bit depth of the `b` buffer to 16 makes this example work just fine. I could not get it to work correctly for 8 bit.

4 bit color with a transparent color defined would use the minimum possible amount of RAM, but that probably needs a palette and matching conversion of the images used in the watchface?

If a 4 bit palette for drawing the contents of `b` to `g` gives me correct colors, does dithering of images with bigger bit depths still work when drawing to the 4 bit buffer?


-

• #23

The dithering will only work when drawing to the LCD itself as that's where it is handled, so if you draw to a graphics instance you won't get it.

I guess the colors are garbled because when drawing a 4bpp image it's treated as a Mac palette? I guess you could either specify a palette when you draw it, or maybe pre-convert all images you're rendering to 4 bit Mac palette ones?

-

• #24

Thanks, I have experimented using a palette. In this commit the palette is created to match what the image converter does for 3bpp RGB and 4bpp RGBA images. That seems to work fine for my purposes. I assume the image converter implicitly always uses the same "palette" when doing 3bpp or 4bpp images?

Only 8 colors of this palette are used, and a lookup table gives the matching palette index for each color, so I can draw directly onto the buffer with the correct color.
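A sketch of such a palette plus lookup table. It assumes the 3bpp RGB layout uses one bit per channel with red in bit 2, green in bit 1 and blue in bit 0 (check this against what the image converter actually produces), and 16-bit RGB565 palette entries in a `Uint16Array`. The lookup maps an RGB565 color back to its palette index.

```javascript
// 16-entry RGB565 palette whose first 8 entries are the 3bpp RGB colors.
// ASSUMPTION: 3bpp index bits are R = bit 2, G = bit 1, B = bit 0.
function buildPalette() {
  var pal = new Uint16Array(16);   // entries 8..15 stay unused (0)
  for (var i = 0; i < 8; i++) {
    pal[i] = ((i & 4) ? 0xF800 : 0) |   // red: 5 bits
             ((i & 2) ? 0x07E0 : 0) |   // green: 6 bits
             ((i & 1) ? 0x001F : 0);    // blue: 5 bits
  }
  return pal;
}

// Reverse lookup: RGB565 color -> palette index, for drawing directly into the buffer
function buildLookup(pal) {
  var lookup = {};
  for (var i = 0; i < 8; i++) lookup[pal[i]] = i;
  return lookup;
}

var pal = buildPalette();
var lookup = buildLookup(pal);
console.log(pal[7].toString(16), lookup[0x07E0]);
```

If the bit order matches, shapes could presumably be drawn into the 4bpp buffer with the raw index as the color (`b.setColor(lookup[color])`), and the palette attached to the image object from `b.asImage()` before drawing it to `g`.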

Does the buffer bit depth impact the performance of drawing operations? Perhaps more/less bit shifting at certain depths?

-

• #25

I assume the image converter implicitly always uses the same "palette" when doing 3bpp or 4bpp images?

Yes, should do...

Does the buffer bit depth impact the performance of drawing operations?

Not much... Some will be a lot faster (8bpp?) if there's a 'fast path' implemented, but on the whole they all use the same code and I don't think you'll see much difference.


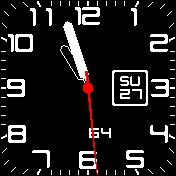

I am developing a highly customizable watch face. The idea is to define the watchface entirely in a JSON file, based on displaying images from a resource file. The images are converted from a folder structure into a JSON resources file and are then referenced from the watchface file. Watchfaces can be zipped and loaded as one file, or the folder can be used directly if the browser supports that. Conversion happens in the browser, so no additional tools are needed for installing/converting watchfaces. Chromium on Arch Linux works fine for me.
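A sketch of the folder-to-JSON step, assuming the browser hands over a map of relative paths (as a zip listing or a directory picker would give) to per-file data. The nesting rule, one object level per directory with the file extension stripped, is a guess at the structure described, not the actual imageclock format.

```javascript
// Turn "dir/sub/file.ext" paths into nested objects keyed by directory and file name.
function buildResources(files) {
  var root = {};
  Object.keys(files).forEach(function (path) {
    var parts = path.split("/");
    var node = root;
    for (var i = 0; i < parts.length - 1; i++) {
      node = node[parts[i]] || (node[parts[i]] = {});
    }
    var fileName = parts[parts.length - 1].replace(/\.[^.]*$/, ""); // strip extension
    node[fileName] = files[path];
  });
  return root;
}

var res = buildResources({
  "Background/bg.png": { width: 176, height: 176 },
  "Weather/Icons/0.png": { width: 63, height: 64 },
  "Weather/Icons/1.png": { width: 63, height: 64 }
});
console.log(JSON.stringify(res));
```

The watchface file can then reference an image by its path through the tree, e.g. `Weather.Icons.1`.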

You can try it out at https://halemmerich.github.io/BangleApps/?id=imageclock .

There are two example watchfaces you can download from the customization page and try out. The file format and features are described in the README, but the whole thing is far from finished.

The main problem at the moment is the memory use and relatively slow drawing. There are close to no performance optimizations yet. Ideas on how to avoid having all images in memory at the same time would be appreciated. I would prefer not to write them all into dedicated files, as that would clutter the storage. Maybe parsing the JSON SAX-style is possible?

What do you think, are there features you would like to have in something like this?
